Managing Data in DeFi: The Emergence of a Decentralised AWS

What will minimum viable decentralisation mean for data infrastructure providers?

Welcome to another edition of the DeFi Mullet newsletter! In this newsletter, I hope to cover the arguably boring world of the most anticipated collab of the decade: Fintech x DeFi (ft. policy, markets, tech, product, etc.).

If you are one of the fine folks who get a thrill from these topics, press subscribe below!

Take a deep breath and visualise…

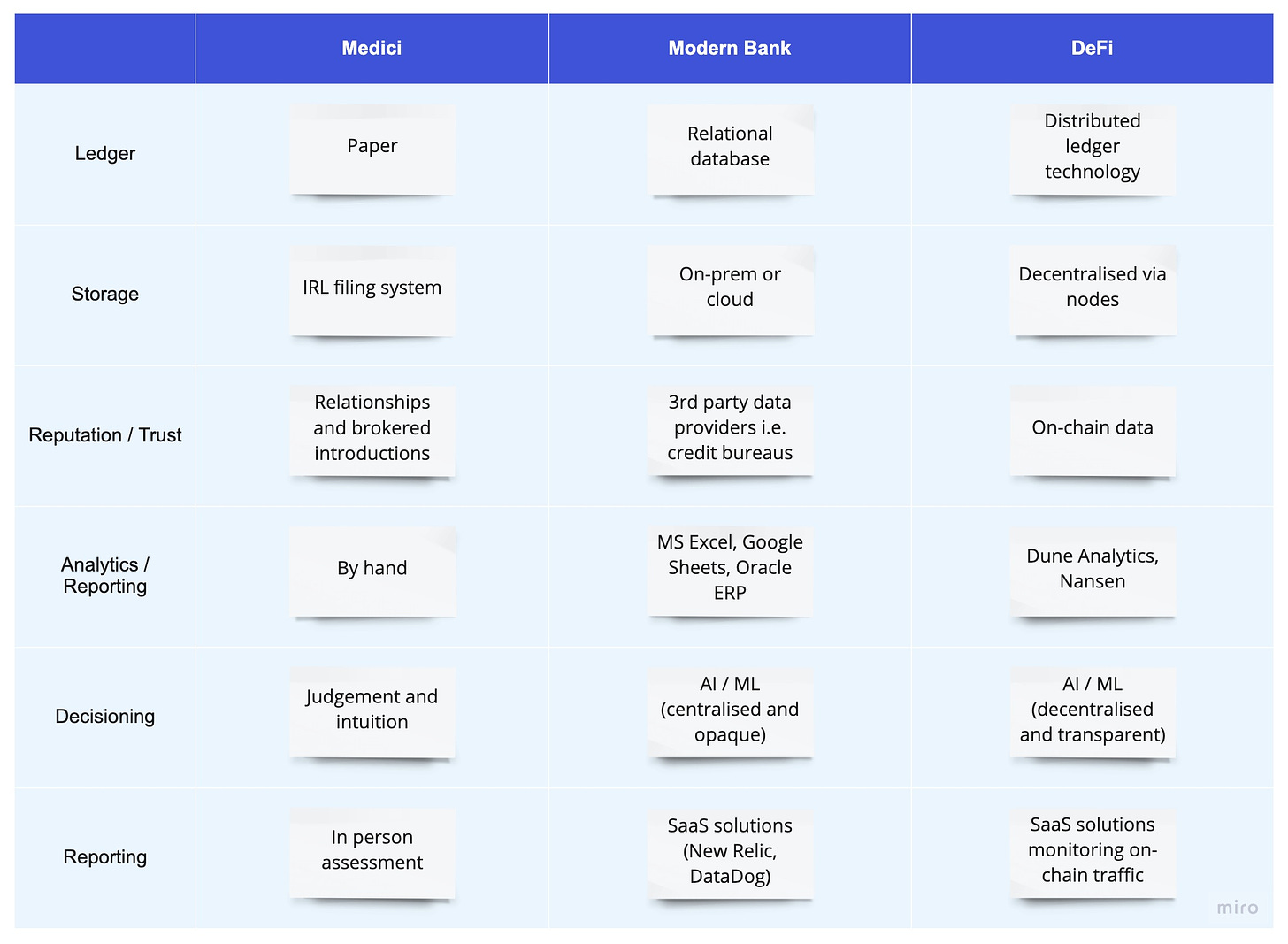

As we sit in our bedrooms, offices, and hybrid workspaces, we can easily forget the level of abstraction we are operating at when discussing financial services. We are a long way from the invention of double-entry bookkeeping in 13th-century Europe. As investors and builders, we often operate several layers of abstraction removed from the actual transmission of physical value facilitated by the machinery of financial services. Modern financial services applications are premised upon data, and lots of it. Everything we do relies on data storage, transmission, and computation. It is timely that we seek to understand the role of data in decentralised finance, reflecting on the role data has played in the development of TradFi. In DeFi, more than ever, data is the fuel powering everything we do.

Horizons of Data in Financial Services

The major data building blocks in DeFi so far

Node access:

In blockchain-based systems, nodes are what enable direct access to the great distributed state machine. Nodes are used to write directly to, and read directly from, a blockchain network. Reading state from a node gives an application the most real-time view of a network it can have (Ethereum, for example, distinguishes between light nodes, full nodes, and archive nodes). Reading directly from nodes is acceptable while a blockchain is still a manageable size, but as the chain grows, this approach stops scaling. Node access is a crucial component of understanding state, and the path to scaling it is captured in many of the themes discussed below.

Players: Infura, Alchemy, Syndica, Blockdaemon, Ankr, Quicknode, Pokt, Chainstack, Coinbase Cloud, Getblock, NowNodes, InfStones, WatchData
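To make "reading state from a node" concrete, the sketch below builds an Ethereum JSON-RPC request for `eth_getBalance` and decodes the hex-encoded quantity a node returns. The endpoint URL, the zero address, and the sample response are hypothetical placeholders. The `send_rpc` helper shows how a request would be POSTed but is deliberately not called. Any of the providers listed above exposes an endpoint that accepts this general request shape.

```python
import json
import urllib.request

# Hypothetical endpoint: substitute the URL issued by a node provider or your own node.
NODE_URL = "https://example-node-provider.invalid/v1/YOUR_API_KEY"


def build_rpc_request(method: str, params: list, request_id: int = 1) -> bytes:
    """Serialise a JSON-RPC 2.0 request body in the shape Ethereum nodes accept."""
    payload = {"jsonrpc": "2.0", "method": method, "params": params, "id": request_id}
    return json.dumps(payload).encode("utf-8")


def send_rpc(body: bytes, url: str = NODE_URL) -> dict:
    """POST the request to a node and return the decoded JSON response (not called below)."""
    request = urllib.request.Request(url, data=body, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.loads(response.read())


def decode_quantity(hex_value: str) -> int:
    """Ethereum JSON-RPC encodes integer quantities as 0x-prefixed hex strings."""
    return int(hex_value, 16)


# Ask for an account's balance as of the node's most recent block ("latest").
body = build_rpc_request("eth_getBalance", ["0x0000000000000000000000000000000000000000", "latest"])

# Illustrative response shape only; a real node returns the account's current balance in wei.
sample_response = {"jsonrpc": "2.0", "id": 1, "result": "0xde0b6b3a7640000"}
print(decode_quantity(sample_response["result"]))  # 1000000000000000000 wei, i.e. 1 ether
```

Every read like this is a round trip to a node. This is why, as the post notes, serving rich per-user views straight from nodes becomes costly as chains grow.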

Indexing, Querying, Databases:

As a blockchain grows, it becomes necessary to index its data to keep client-facing applications performant. Raw blockchain data can be ingested, processed, and indexed so it can be served to client-side applications in a cleaner form and with lower latency. One use case where indexing excels is helping client-side applications swiftly serve user-level data, i.e. all information associated with a single user account. This matters because slow queries translate directly into a sluggish front end and a degraded user experience.

Players: The Graph, Luabase, Tableland, Covalent, Coherent, neeva.xyz, Goldsky, Vybe Network, Kyve, flipsidecrypto.xyz
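The core idea behind indexing can be shown in a few lines. Raw events are ingested once and grouped by every account they touch, so that a user-level query becomes a single lookup instead of a scan over the whole chain. This is a minimal in-memory sketch: the `Transfer` record, the `"mint"` sender label, and the sample events are hypothetical, and it does not reflect how The Graph or any other listed indexer is implemented.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    """A simplified token-transfer event, as it might be decoded from raw logs (hypothetical)."""
    block: int
    sender: str
    recipient: str
    amount: int


def build_account_index(transfers):
    """Ingest transfers once and file each one under every account it touches."""
    index = defaultdict(list)
    for t in transfers:
        index[t.sender].append(t)
        if t.recipient != t.sender:  # avoid double-filing self-transfers
            index[t.recipient].append(t)
    return index


def account_history(index, account):
    """Serve user-level data with one dictionary lookup rather than a chain scan."""
    return index.get(account, [])


def account_balance(index, account):
    """Derive a balance from the account's indexed history."""
    balance = 0
    for t in account_history(index, account):
        if t.recipient == account:
            balance += t.amount
        if t.sender == account:
            balance -= t.amount
    return balance


raw_events = [
    Transfer(block=1, sender="mint", recipient="alice", amount=100),
    Transfer(block=2, sender="alice", recipient="bob", amount=40),
    Transfer(block=3, sender="bob", recipient="carol", amount=15),
]
index = build_account_index(raw_events)
print(len(account_history(index, "bob")), account_balance(index, "bob"))  # 2 25
```

Production indexers persist this kind of structure in a database and update it incrementally as new blocks arrive. The trade-off is the same: do the work once at ingestion so that reads stay fast.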

Decentralised Storage:

DeFi produces vast amounts of data and, unsurprisingly, has an ongoing appetite for storage. Centralised providers such as Amazon, Google, and Microsoft own and maintain gargantuan data centres that store incredible amounts of data. Decentralised storage applications, by contrast, encrypt data, break it into smaller pieces, and distribute those pieces across a variety of peer-to-peer storage providers. Decentralised storage is not limited to storing a ledger; it also includes decentralised file storage. Further, in Ethereum's case, as the chain grows it becomes necessary for decentralised storage providers to serve and maintain the ledger's full transaction history:

The purpose of the Ethereum consensus protocol is not to guarantee storage of all historical data forever. Rather, the purpose is to provide a highly secure real-time bulletin board, and leave room for other decentralized protocols to do longer-term storage. The bulletin board is there to make sure that the data being published on the board is available long enough that any user who wants that data, or any longer-term protocol backing up the data, has plenty of time to grab the data and import it into their other application or protocol

Players: Filecoin, IPFS, Ceramic, Arweave, Storj, Swarm, Pinata, Web3.storage
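The "break it up and distribute it" step can be sketched as follows. Data is split into chunks, each chunk is addressed by its hash, and each chunk is placed on several providers using rendezvous (highest-random-weight) hashing. Retrieval verifies every piece against its address. This is an illustration under stated assumptions rather than how Filecoin, IPFS, or Arweave actually work: the encryption step is omitted, the content ID is a plain SHA-256 hex digest rather than a real IPFS CID, and the provider names and tiny chunk size are hypothetical.

```python
import hashlib

CHUNK_SIZE = 4  # tiny for demonstration; real systems use chunks of kilobytes to megabytes


def chunk(data: bytes, size: int = CHUNK_SIZE) -> list:
    """Split data into fixed-size pieces (the last piece may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def content_id(piece: bytes) -> str:
    """Address a piece by the hash of its contents (simplified; not a real IPFS CID)."""
    return hashlib.sha256(piece).hexdigest()


def place(cid: str, providers: list, replicas: int = 2) -> list:
    """Rendezvous hashing: rank providers by hash(provider + cid) and keep the top `replicas`."""
    ranked = sorted(providers, key=lambda p: hashlib.sha256((p + cid).encode()).hexdigest(), reverse=True)
    return ranked[:replicas]


def store(data: bytes, providers: list, replicas: int = 2):
    """Distribute chunks across providers; return the ordered manifest and each provider's store."""
    manifest, stores = [], {p: {} for p in providers}
    for piece in chunk(data):
        cid = content_id(piece)
        for p in place(cid, providers, replicas):
            stores[p][cid] = piece
        manifest.append(cid)
    return manifest, stores


def retrieve(manifest: list, stores: dict) -> bytes:
    """Reassemble data from any provider holding each piece, verifying content against its ID."""
    pieces = []
    for cid in manifest:
        for held in stores.values():
            piece = held.get(cid)
            if piece is not None and content_id(piece) == cid:
                pieces.append(piece)
                break
        else:
            raise KeyError(f"no provider holds a valid copy of {cid}")
    return b"".join(pieces)


original = b"hello decentralised storage"
manifest, stores = store(original, ["provider-a", "provider-b", "provider-c"])
del stores["provider-a"]  # simulate one provider going offline
print(retrieve(manifest, stores) == original)  # True, because every chunk has two replicas
```

Because each piece is addressed by its hash, a client can verify data from an untrusted provider. Replication then covers providers that drop offline.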

Decentralised Compute:

As highlighted in Moxie’s first impressions of web3, blockchain-enabled applications depend heavily on centralised server-side compute. As builders ask what decentralisation means for them and their users, it becomes apparent that many will want to include decentralised compute in their data infrastructure stack. The primary way of facilitating decentralised compute is a marketplace with an underlying tokenomic model that manages supply and demand incentives, e.g. Akash and Golem.

Players: Akash, Aleph, Golem, Cudo Compute, iExec, Internet Computer
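A compute marketplace at its simplest matches a workload to the providers able to run it. The sketch below is loosely modelled on a reverse auction: providers bid, and the cheapest bid that meets the job's resource requirement and price ceiling wins. It is a hypothetical illustration, not Akash's or Golem's actual protocol. Tokens, escrow, reputation, and verification of the work done are all left out, and the field names are assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Bid:
    """A provider's offer to run workloads (hypothetical fields)."""
    provider: str
    cpu: int               # CPU cores offered
    price_per_hour: float  # asking price in some unit of account


@dataclass
class Job:
    """A workload a user wants to deploy (hypothetical fields)."""
    name: str
    cpu: int                   # CPU cores required
    max_price_per_hour: float  # the most the user will pay


def match(job: Job, bids: list) -> Optional[Bid]:
    """Reverse auction: among bids meeting the job's needs, the lowest price wins."""
    eligible = [b for b in bids if b.cpu >= job.cpu and b.price_per_hour <= job.max_price_per_hour]
    return min(eligible, key=lambda b: b.price_per_hour, default=None)


bids = [Bid("provider-a", 4, 0.50), Bid("provider-b", 8, 0.30), Bid("provider-c", 2, 0.10)]
winner = match(Job("indexer", cpu=4, max_price_per_hour=0.60), bids)
print(winner.provider if winner else "no match")  # provider-b
```

The tokenomic layer the post describes sits around this matching step. It rewards providers for bidding honestly and penalises them for failing to deliver.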

Oracles & Data Marketplaces (on-chain & off-chain):

Oracles provide data streams that can be integrated into smart contracts and user-facing applications. Oracle data can be both on-chain (e.g. AMM swap rates, contract calls, multi-chain data feeds) and off-chain (e.g. ETH/USD prices from centralised exchanges, government interest rates, stock prices, commodity prices, weather events). Further, token primitives have made it possible to spin up decentralised marketplaces for datasets that maintain incentives for both data providers and data users. These data marketplaces enable further off-chain data to flow into decentralised finance.

Players: Chainlink, Redstone, Ocean Protocol, Data Union, Flux Protocol, Pyth, Dia Data
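A common way to make an oracle feed robust is to collect independent reports, discard stale ones, and publish the median, so that one faulty or manipulated source cannot drag the price far. This is a simplified sketch of that idea, not the actual aggregation used by Chainlink, Pyth, or any other listed network. The source names, the 60-second staleness window, and the three-source minimum are hypothetical parameters.

```python
import statistics
from dataclasses import dataclass


@dataclass
class Report:
    """One source's observation of an off-chain price (hypothetical fields)."""
    source: str
    price: float
    timestamp: int  # seconds


def aggregate(reports: list, now: int, max_age: int = 60, min_sources: int = 3) -> float:
    """Drop stale reports, require a quorum, and return the median of the rest."""
    fresh = [r.price for r in reports if now - r.timestamp <= max_age]
    if len(fresh) < min_sources:
        raise ValueError("not enough fresh reports to publish a price")
    return statistics.median(fresh)


reports = [
    Report("source-a", 1800, 990),
    Report("source-b", 1801, 995),
    Report("source-c", 1799, 998),
    Report("source-d", 5000, 999),  # faulty outlier
    Report("source-e", 1802, 997),
    Report("source-f", 10, 900),    # stale: older than max_age, so ignored
]
print(aggregate(reports, now=1000))  # 1801
```

The median of the five fresh reports is 1801 despite the 5000 outlier, whereas a simple mean would have been pulled up to roughly 2440.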

Performance Monitoring:

With increased distribution and scale, smart contracts experience considerable volume flows (i.e. read and write contract calls). It is important that teams and market participants are able to set up comprehensive monitoring solutions to alert when there are deviations from expected behaviour: significant increases or decreases in volume, strange contract calls, or large unexpected transfers of assets. The swift identification and resolution of performance issues will become increasingly important as more users continue to onboard into DeFi/Web3 and user protection becomes more frequently discussed.

Players: Blocknative, Tenderly, Blocktorch
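One simple form of the deviation alerting described above is a rolling z-score on call or transfer volume. Each new observation is compared with the mean and standard deviation of a trailing window, and an alert fires when it sits too many standard deviations away. This is a minimal sketch with a hypothetical window size and threshold. It is not how Blocknative, Tenderly, or Blocktorch implement monitoring, and real systems would combine it with rules for strange calls and large transfers.

```python
import statistics


def volume_alerts(volumes: list, window: int = 5, threshold: float = 3.0) -> list:
    """Return indices where volume deviates more than `threshold` std devs from the trailing window."""
    alerts = []
    for i in range(window, len(volumes)):
        history = volumes[i - window:i]
        mean = statistics.fmean(history)
        std = statistics.pstdev(history)
        if std == 0:
            # A perfectly flat history: any change at all counts as a deviation.
            if volumes[i] != mean:
                alerts.append(i)
            continue
        if abs(volumes[i] - mean) / std > threshold:
            alerts.append(i)
    return alerts


# Hypothetical per-block contract call counts with one sudden spike.
calls_per_block = [100, 102, 98, 101, 99, 100, 400, 101]
print(volume_alerts(calls_per_block))  # [6]
```

The same loop works for drops in volume, since it checks the absolute deviation. Choosing the window and threshold is a trade-off between missed incidents and alert fatigue.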

Analytics / Data Science & ML:

There is already a multitude of analytics providers in DeFi/web3 that have embraced the open nature of many of today’s major blockchains. Analytics providers enable the structuring and visualisation of large amounts of on-chain data. In many cases, analytics providers take raw transaction data and structure it so that data can be queried and analysed using languages already familiar to data analysts / scientists: SQL and Python. We are increasingly seeing analytics providers branch out and begin offering API services in competition with subgraph indexers such as The Graph.

Players: Dune Analytics, Amberdata, Metrika, Nansen, Token Terminal, Coinmetrics
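The pattern of taking raw transaction data and structuring it so it can be queried with SQL from Python can be shown with the standard library alone. The sketch loads hypothetical raw transaction records into an in-memory SQLite table and runs an aggregate query. The table schema, field names, and sample data are assumptions; analytics platforms such as Dune use their own schemas and query engines.

```python
import sqlite3


def load_transactions(raw_txs: list) -> sqlite3.Connection:
    """Structure raw transaction dicts into a queryable SQL table (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE transactions ("
        " tx_hash TEXT PRIMARY KEY, block_number INTEGER,"
        " sender TEXT, recipient TEXT, value INTEGER)"
    )
    rows = [(t["hash"], t["block"], t["from"], t["to"], t["value"]) for t in raw_txs]
    conn.executemany("INSERT INTO transactions VALUES (?, ?, ?, ?, ?)", rows)
    conn.commit()
    return conn


TOP_SENDERS_SQL = """
SELECT sender, SUM(value) AS total_sent, COUNT(*) AS tx_count
FROM transactions
GROUP BY sender
ORDER BY total_sent DESC
"""

raw_txs = [
    {"hash": "0x01", "block": 100, "from": "alice", "to": "bob", "value": 5},
    {"hash": "0x02", "block": 100, "from": "alice", "to": "carol", "value": 7},
    {"hash": "0x03", "block": 101, "from": "bob", "to": "carol", "value": 3},
    {"hash": "0x04", "block": 102, "from": "carol", "to": "alice", "value": 1},
]
conn = load_transactions(raw_txs)
for row in conn.execute(TOP_SENDERS_SQL):
    print(row)  # ('alice', 12, 2), then ('bob', 3, 1), then ('carol', 1, 1)
```

Once the data sits in a table, analysts can answer new questions by writing new queries instead of new ingestion code.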

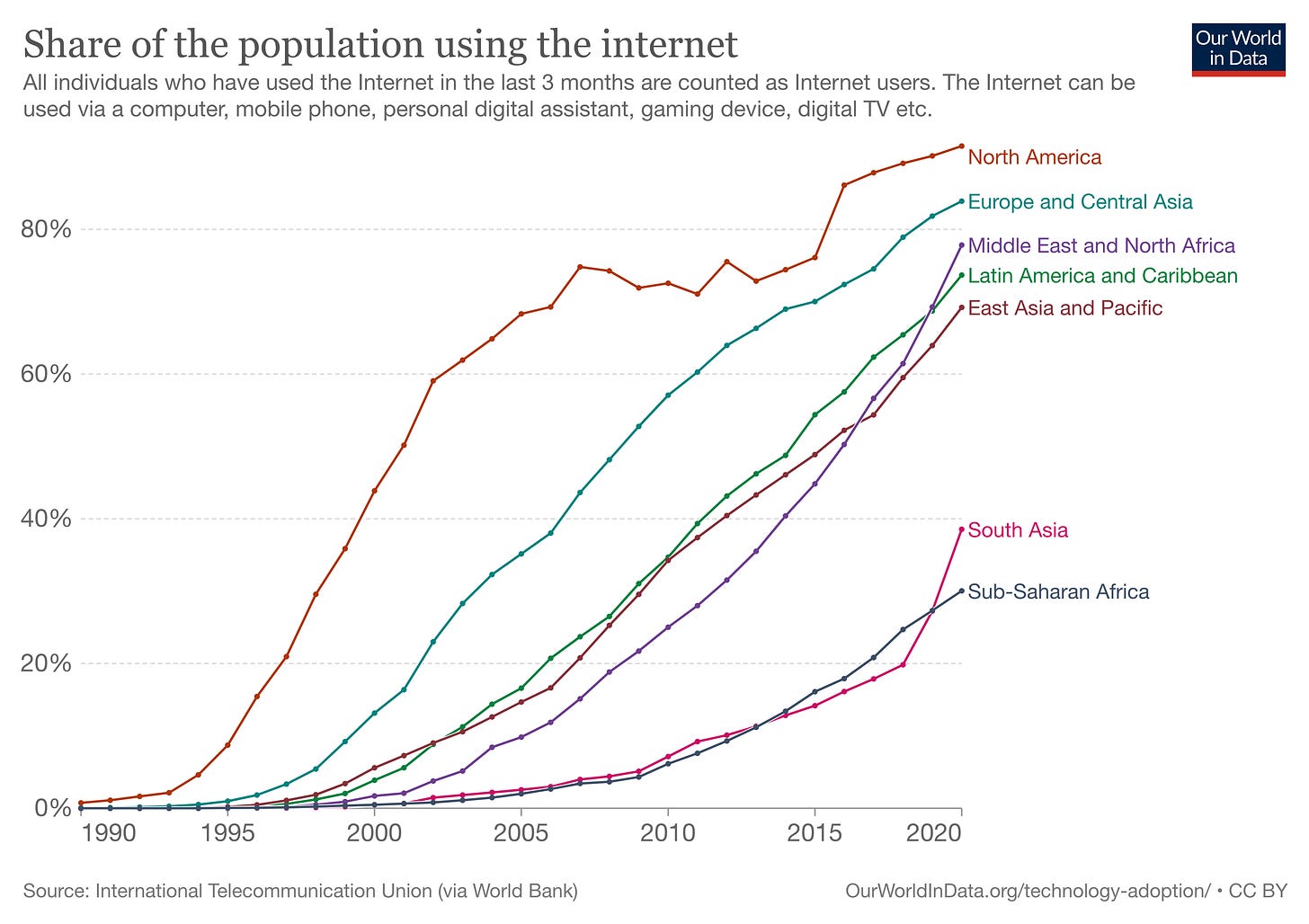

It is easy to overlook the scale of what this can become

It is useful to look at data theses with reference to web2 to better understand the enormity of what needs to be built in DeFi. A favourite to reflect on is the Bessemer Venture Partners data infrastructure roadmap. Bessemer does a fantastic job of defining the narrative:

The success of a modern business, from small-to-medium companies to Fortune 500 enterprises, is increasingly tied to the ability to glean valuable insights or to drive superior user experiences from its data. As the technical barriers to making use of data have gone down in recent years, organizations have realized that data infrastructure is fundamentally tied to accelerating product innovation, optimizing financial management, surfacing customer insights, and building flexible and nimble operations to outmaneuver the competitive landscape. After all, global organizational spending on infrastructure has topped more than $180 billion and continues to grow every year.

If we believe that DeFi will play a paradigm-shifting role in, among other things, the decentralisation of modern financial services, then it follows that there is a tremendous opportunity for data infrastructure businesses to solve the challenges unique to blockchain-based applications.

Bessemer clearly outlines the breadth of data infrastructure services and the depth of players in each opportunity space. It is safe to say that we are yet to reach a similar stage of maturity in DeFi. We are very much at the starting line and it is imperative we take seriously the opportunity we have before us. Anyone who is serious about the role of DeFi in the future of financial services should also understand the importance of enabling the supporting data infrastructure at scale.

The second macro consideration worth reflecting on is the enormous impact Amazon Web Services has had on the world of cloud infrastructure. Listening to the latest Acquired episode on AWS, it is clear there are many parallels between the early days of AWS and what is currently taking shape in DeFi. The main parallels are storage, compute, and databases. Amazon’s first public-facing foray into cloud infrastructure was the release of Amazon S3 as a storage solution for things such as images, videos, and documents; we can see IPFS as the parallel in the decentralised world. Amazon’s second cloud offering was Amazon EC2, which allowed users to rent virtual computers to run their own applications; we can see Akash, Aleph, and Golem as early entrants enabling decentralised compute. The third parallel is databases, where Amazon RDS is the stalwart; we can see Tableland emerging as the DeFi equivalent. Decentralised data infrastructure is unbundling AWS and establishing decentralised primitives as an alternative to existing centralised solutions.

Charts to ponder…

What needs to go right to make decentralised data infrastructure at scale a reality?

Reflections

It is important we accept where we are: at the beginning, forging the foundations of what are to become systemically important pieces of infrastructure. With that in mind, we can reflect on why continuing to build and invest in data infrastructure serving DeFi is so incredibly important for the next 3-5 years:

Facilitate scale

Enable increasingly complex services at the application layer

Define what Minimum Viable Decentralisation actually means

Continue to improve the overall user experience of DeFi/web3

Create optionality and flexibility for builders

Investors and builders need to ask themselves: what does decentralisation mean for us and our customers? Is it enough to simply utilise decentralised ownership primitives or do we need to consider decentralisation throughout other parts of our data infrastructure stack?

If the answer to either question is that decentralised data infrastructure is required, then the sheer amount that still needs to be built represents a staggering opportunity.

If you are looking to build or are investing in what’s next, I would love to chat. Please reach out via DM on Twitter or reply via email 🙏

Additions to include from the latest YC (Summer 22) cohort:

Probably Nothing Labs: https://www.probablynothinglabs.xyz/

Blockscope: https://www.blockscope.tech/